NFS Client Tuning on Linux


In some of my previous posts, I spent some time attempting to squeeze the best possible NFS performance out of OpenBSD. This time, I wanted to run a similar test on Linux and see if the same findings were applicable.

I found the results rather interesting, as they show that Linux is capable of faster transfer speeds than OpenBSD, with much less work. Of course, this doesn't make me like OpenBSD any less (obviously), as the OS is still capable of fast transfers, and I am not building any supercomputers at my home.

I know there are plenty of articles out there that go into great detail on achieving the best possible NFS performance. However, most of them only highlight possible settings to use, and none of the ones I found offered a way to test those settings. The script at the bottom of this article should enable admins to automate the process of finding the proper NFS values for their servers.

First off, I did some research and tweaked my original NFS testing script from earlier articles to do away with rsync and switch to dd, using special flags to bypass the RAM cache Linux uses. This tests the raw transfer speeds, so the results are not skewed by buffering.
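As a minimal sketch of the dd approach described above: the helper names below (direct_write, direct_read, dd_summary) are hypothetical and not part of the script at the bottom of this article. The write uses oflag=direct as the script does. The read adds iflag=direct, which the script itself does not use, so treat that as a variation. dd_summary counts fields from the end of dd's summary line, on the assumption that dd prints its usual English "copied" line.

```bash
#!/bin/bash
# Hypothetical helpers for illustration only.

# Write COUNT MB of zeros to FILE with O_DIRECT so the client page cache is bypassed.
# dd prints its statistics on stderr, so 2>&1 makes them capturable.
direct_write() {
    local file=$1 count=$2
    dd if=/dev/zero of="$file" bs=1M count="$count" oflag=direct 2>&1
}

# Read FILE back and throw the data away, again bypassing the page cache.
direct_read() {
    local file=$1
    dd if="$file" of=/dev/null bs=1M iflag=direct 2>&1
}

# Print "seconds throughput" from dd's summary line (the one containing "copied").
# Fields are counted from the end of the line because newer coreutils print a
# second size in parentheses, which shifts fields counted from the start.
dd_summary() {
    awk '/copied/ { print $(NF-3), $(NF-1) $NF }'
}

# Example (not run here): direct_write /data/zeros.file 1024 | dd_summary
```

Piping either helper through dd_summary gives the two values that end up in the log: the transfer time in seconds and the throughput.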

Also, RHEL7/CentOS7 recommends using TCP rather than UDP, as read here.

For this reason, I am only running my tests using TCP. When I did do some testing using UDP on the CentOS server, I saw a massive speed degradation that was not seen when using TCP.

Also, the script has been modified to run read, as well as write tests.

Top 30 Read Results:

| Direction | Sync | Protocol | Mount | Buffer (bytes) | Transfer Size (MB) | Transfer Time (s) | Transfer Speed |
|---|---|---|---|---|---|---|---|
| read | sync | tcp | soft | 131072 | 4096 | 36.5489 | 118MB/s |
| read | sync | tcp | soft | 131072 | 2048 | 18.2744 | 118MB/s |
| read | sync | tcp | soft | 131072 | 1024 | 9.13749 | 118MB/s |
| read | sync | tcp | hard | 131072 | 4096 | 36.5475 | 118MB/s |
| read | sync | tcp | hard | 131072 | 2048 | 18.2746 | 118MB/s |
| read | sync | tcp | hard | 131072 | 1024 | 9.13775 | 118MB/s |
| read | async | tcp | soft | 131072 | 4096 | 36.5473 | 118MB/s |
| read | async | tcp | soft | 131072 | 2048 | 18.2741 | 118MB/s |
| read | async | tcp | soft | 131072 | 1024 | 9.13767 | 118MB/s |
| read | async | tcp | hard | 131072 | 4096 | 36.5479 | 118MB/s |
| read | async | tcp | hard | 131072 | 2048 | 18.2747 | 118MB/s |
| read | async | tcp | hard | 131072 | 1024 | 9.13783 | 118MB/s |
| read | sync | tcp | soft | 65536 | 4096 | 36.6083 | 117MB/s |
| read | sync | tcp | soft | 65536 | 2048 | 18.3038 | 117MB/s |
| read | sync | tcp | soft | 65536 | 1024 | 9.15332 | 117MB/s |
| read | sync | tcp | soft | 32768 | 4096 | 36.6747 | 117MB/s |
| read | sync | tcp | soft | 32768 | 2048 | 18.3372 | 117MB/s |
| read | sync | tcp | soft | 32768 | 1024 | 9.17016 | 117MB/s |
| read | sync | tcp | hard | 65536 | 4096 | 36.6055 | 117MB/s |
| read | sync | tcp | hard | 65536 | 2048 | 18.3032 | 117MB/s |
| read | sync | tcp | hard | 65536 | 1024 | 9.15207 | 117MB/s |
| read | sync | tcp | hard | 32768 | 4096 | 36.6751 | 117MB/s |
| read | sync | tcp | hard | 32768 | 1024 | 9.16981 | 117MB/s |
| read | async | tcp | soft | 65536 | 4096 | 36.6052 | 117MB/s |
| read | async | tcp | soft | 65536 | 2048 | 18.3035 | 117MB/s |
| read | async | tcp | soft | 65536 | 1024 | 9.15207 | 117MB/s |
| read | async | tcp | soft | 32768 | 4096 | 36.6767 | 117MB/s |
| read | async | tcp | soft | 32768 | 2048 | 18.3374 | 117MB/s |
| read | async | tcp | soft | 32768 | 1024 | 9.16966 | 117MB/s |
| read | async | tcp | hard | 65536 | 4096 | 36.6047 | 117MB/s |

Top 30 Write Results:

| Direction | Sync | Protocol | Mount | Buffer (bytes) | Transfer Size (MB) | Transfer Time (s) | Transfer Speed |
|---|---|---|---|---|---|---|---|
| write | sync | tcp | soft | 65536 | 1024 | 12.7614 | 84.1MB/s |
| write | sync | tcp | soft | 32768 | 4096 | 51.0877 | 84.1MB/s |
| write | sync | tcp | soft | 32768 | 2048 | 25.5335 | 84.1MB/s |
| write | sync | tcp | soft | 32768 | 1024 | 12.7988 | 83.9MB/s |
| write | sync | tcp | soft | 131072 | 2048 | 25.6036 | 83.9MB/s |
| write | async | tcp | soft | 32768 | 2048 | 25.5911 | 83.9MB/s |
| write | async | tcp | soft | 32768 | 1024 | 12.8041 | 83.9MB/s |
| write | async | tcp | hard | 65536 | 2048 | 25.5911 | 83.9MB/s |
| write | async | tcp | hard | 32768 | 2048 | 25.5999 | 83.9MB/s |
| write | sync | tcp | hard | 65536 | 2048 | 25.6387 | 83.8MB/s |
| write | async | tcp | soft | 32768 | 4096 | 51.2749 | 83.8MB/s |
| write | async | tcp | hard | 32768 | 4096 | 51.2585 | 83.8MB/s |
| write | sync | tcp | soft | 65536 | 2048 | 25.6436 | 83.7MB/s |
| write | sync | tcp | hard | 65536 | 4096 | 51.3207 | 83.7MB/s |
| write | async | tcp | hard | 65536 | 1024 | 12.8344 | 83.7MB/s |
| write | async | tcp | hard | 65536 | 4096 | 51.3744 | 83.6MB/s |
| write | sync | tcp | soft | 65536 | 4096 | 51.4253 | 83.5MB/s |
| write | sync | tcp | hard | 65536 | 1024 | 12.8609 | 83.5MB/s |
| write | sync | tcp | hard | 32768 | 4096 | 51.4328 | 83.5MB/s |
| write | async | tcp | hard | 32768 | 1024 | 12.8662 | 83.5MB/s |
| write | sync | tcp | hard | 32768 | 1024 | 12.8715 | 83.4MB/s |
| write | sync | tcp | hard | 131072 | 2048 | 25.7637 | 83.4MB/s |
| write | async | tcp | soft | 131072 | 4096 | 51.5276 | 83.4MB/s |
| write | async | tcp | soft | 65536 | 1024 | 12.8935 | 83.3MB/s |
| write | sync | tcp | hard | 131072 | 1024 | 12.9163 | 83.1MB/s |
| write | async | tcp | soft | 65536 | 2048 | 25.8834 | 83.0MB/s |
| write | async | tcp | hard | 131072 | 1024 | 12.9441 | 83.0MB/s |
| write | async | tcp | soft | 65536 | 4096 | 51.8221 | 82.9MB/s |
| write | async | tcp | soft | 131072 | 1024 | 12.9866 | 82.7MB/s |
| write | async | tcp | hard | 131072 | 2048 | 26.0415 | 82.5MB/s |
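For anyone wanting to sanity-check the numbers, the transfer speed column can be reproduced from the transfer size and transfer time columns. The sketch below assumes dd's usual conventions: bs=1M means blocks of 1048576 bytes, while the reported MB/s uses decimal megabytes. The function name mb_per_sec is made up for this example.

```bash
#!/bin/bash
# mb_per_sec SIZE_IN_1M_BLOCKS SECONDS
# Converts a dd transfer (count of 1048576-byte blocks, elapsed seconds)
# into decimal megabytes per second, rounded to one decimal place.
mb_per_sec() {
    awk -v blocks="$1" -v secs="$2" \
        'BEGIN { printf "%.1f\n", blocks * 1048576 / secs / 1000000 }'
}

mb_per_sec 4096 36.5489   # first read row,  prints 117.5
mb_per_sec 1024 12.7614   # first write row, prints 84.1
```

dd rounds its own figure to three significant digits, which is why the first read row shows 118MB/s rather than 117.5.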

Interpretations

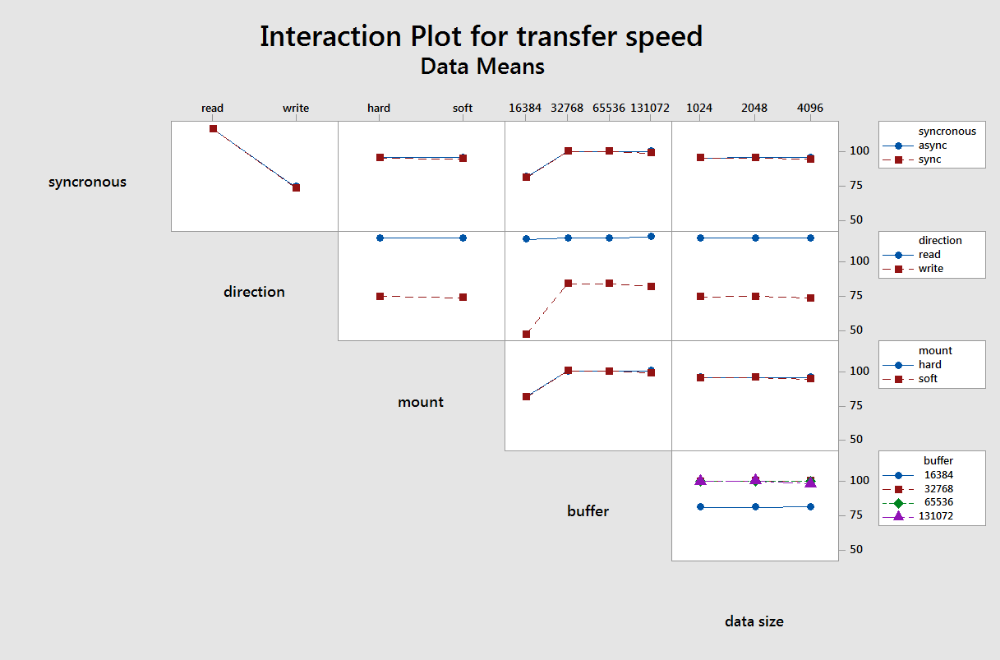

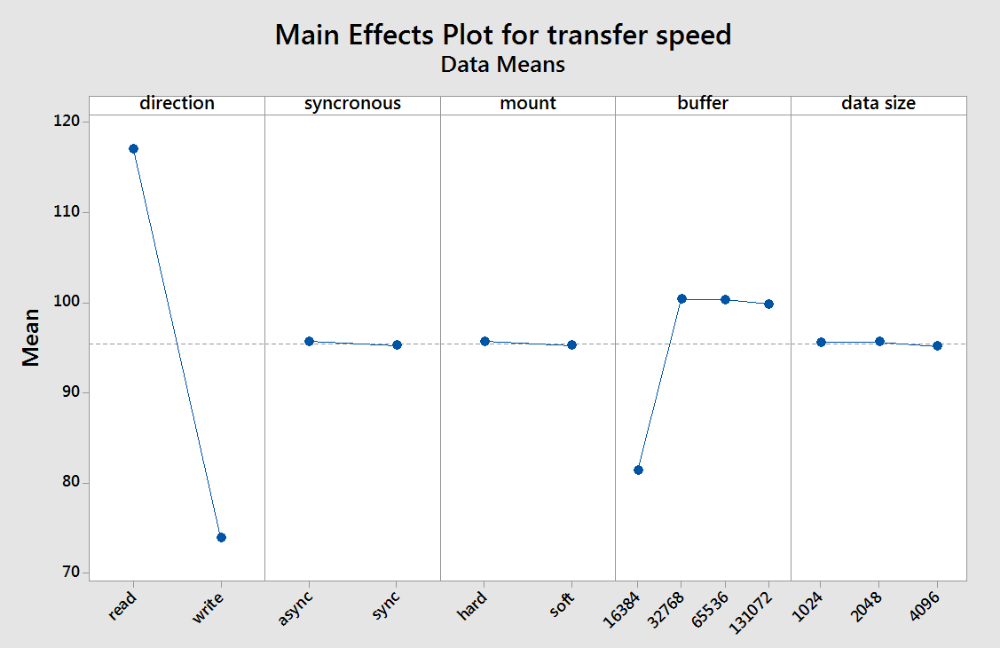

This go-around, I ran the results through Minitab, which was able to generate these plots for further analysis, and yes, synchronous is misspelled:

My interpretation of this plot is that Linux is overall very good at transferring data over NFSv3 with a majority of settings. As long as the buffer size was larger than 16384, the transfer speeds were pretty consistent for both reading and writing.

My comments for this plot echo what was said regarding the previous plot. A majority of settings have little affect on the speed on NFS. It does look here that async and hard settings yielded slightly higher results, but barely.
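One thing worth checking when comparing buffer sizes: the Linux NFS client negotiates rsize and wsize with the server, so the values actually in effect can be lower than the ones requested at mount time. The effective options show up in /proc/mounts. Here is a rough sketch of a helper to read them back. The name nfs_opt and its optional third argument (a file to read instead of /proc/mounts) are my own inventions for illustration.

```bash
#!/bin/bash
# nfs_opt MOUNTPOINT OPTION [MOUNTS_FILE]
# Prints the value of OPTION (e.g. rsize, wsize, proto) for the NFS mount
# at MOUNTPOINT, as recorded in /proc/mounts (or MOUNTS_FILE if given).
nfs_opt() {
    local mnt=$1 opt=$2 mounts=${3:-/proc/mounts}
    awk -v mnt="$mnt" -v opt="$opt" '
        # Field 2 is the mount point, 3 the filesystem type, 4 the options
        $2 == mnt && $3 ~ /^nfs/ {
            n = split($4, o, ",")
            for (i = 1; i <= n; i++)
                # Match only at the start, so "proto" does not hit "mountproto"
                if (index(o[i], opt "=") == 1)
                    print substr(o[i], length(opt) + 2)
        }' "$mounts"
}

# Example (not run here): nfs_opt /data rsize
```

If the printed rsize or wsize is smaller than what was passed to mount, the server capped it, and that test run really measured the smaller buffer.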

Here is the script that was used to generate these results:

#!/bin/bash
# Loops over NFS mount options and logs dd throughput for each combination:
# 1. sync vs async
# 2. TCP vs UDP, ignoring since UDP sucks in my tests on linux
# 3. soft vs hard
# 4. r/w size: 16384 32768 65536 131072

######################
### SET THESE VARS ###
######################

# Log location
log=/tmp/nfstest.log

# NFS share to mount (replace <server> with the NFS server's name or IP;
# quoted so the shell does not treat < and > as redirections)
share='<server>:/tank0/nfs/share'

# Mount location
mnt_dir=/data

########################
### script continues ###
########################

# Standard nfs options
standard='nfsvers=3,'

# Transfer sizes, used as the dd block count with bs=1M
data[0]=1024 # 1G
data[1]=2048 # 2G
data[2]=4096 # 4G

# Declare starting point in log (column order matches the rows written below)
echo "direction,synchronous,protocol,mount,buffer,data size,transfer time,transfer speed" >> "$log"

# Only tcp is tested; add udp to this list to test it as well
for protocol in tcp
do
    echo "$(date) - Using $protocol"
    protocol=${protocol},

    for synchronous in async sync
    do
        echo "$(date) - Using $synchronous"
        synchronous=${synchronous},

        for mount in hard soft
        do
            echo "$(date) - Using $mount"
            mount=${mount},

            for size in 16384 32768 65536 131072
            do
                echo "$(date) - Using a $size byte r/w buffer"

                # Unmount share (ignore errors if it is not mounted) and remount
                echo "$(date) - Remounting share"
                sudo umount "$mnt_dir" >/dev/null 2>&1
                # mount.nfs(8) documents the share and directory before the options
                sudo mount.nfs "$share" "$mnt_dir" -o "${synchronous}${protocol}${mount}${standard}rsize=$size,wsize=$size"

                for e in 0 1 2
                do
                    echo "$(date) - Using ${data[$e]}MB"

                    for direction in write read
                    do
                        # Transfer file
                        if [[ $direction == "write" ]]; then
                            echo -n "$(date) - Writing data: "
                            # Write direct with no caching
                            action=$(dd if=/dev/zero of="${mnt_dir}/zeros.file" bs=1M count="${data[$e]}" oflag=direct 2>&1)
                            # Write while utilizing cache
                            # action=$(dd if=/dev/zero of="${mnt_dir}/zeros.file" bs=1M count="${data[$e]}" 2>&1)
                        else
                            echo -n "$(date) - Reading data: "
                            action=$(dd if="${mnt_dir}/zeros.file" of=/dev/null bs=1M 2>&1)
                        fi

                        # Parse transfer info. $action is left unquoted on purpose so dd's
                        # output collapses to one line; fields are counted from the end
                        # because newer dd versions print an extra size in parentheses.
                        data_trans=$(echo $action | awk '{print $(NF-1)$NF}')
                        data_time=$(echo $action | awk '{print $(NF-3)}')
                        echo "$data_trans"

                        # Sync
                        echo "$(date) - syncing"
                        sync

                        # Save transfer info to log
                        echo "${direction},${synchronous}${protocol}${mount}$size,${data[$e]},${data_time},${data_trans}" >> "$log"
                    done
                done

                # Unmount share and get duration
                echo "$(date) - Unmounting share"
                umount_time=$({ time -p sudo umount "$mnt_dir";} 2>&1 | grep real | awk '{print $2}')
                echo "$(date) - Unmount took ${umount_time}s"
            done
        done
    done
done
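The script only appends rows to the log, so the "top" tables still have to be pulled out of it afterwards. One way to do that, sketched here with a made-up helper name (top_results), is to filter by direction and sort on the eighth comma-separated column. This assumes every speed in the log uses the same unit (MB/s in my results), since sort only looks at the leading number.

```bash
#!/bin/bash
# top_results LOG DIRECTION [N]
# Prints the N fastest rows (default 30) for DIRECTION ("read" or "write").
top_results() {
    local log=$1 direction=$2 n=${3:-30}
    # Anchoring on "direction," also skips the CSV header line.
    # -t, splits on commas; -k8,8nr sorts field 8 numerically, largest first.
    grep "^${direction}," "$log" | sort -t, -k8,8nr | head -n "$n"
}

# Example (not run here): top_results /tmp/nfstest.log read 30
```

Sorting on the speed column rather than the time column matters because the runs move different amounts of data, so their times are not directly comparable.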

Server Notes:

NFS Server: OS: FreeBSD 12.0

CPU: Intel® Xeon® CPU E3-1275 v6 @ 3.80GHz (3792.20-MHz K8-class CPU)

Network: 4 1Gbps igb NICs bonded using LACP

NFS Client OS: CentOS7

CPU: Intel® Core™ i3-6100 CPU @ 3.70GHz, 3701.39 MHz, 06-5e-03

Network: 4 1Gbps NICs bonded using LACP

Has been tested on CentOS7